Generalizable Humanoid Manipulation with 3D Diffusion Policies

With data from only one scene, our humanoid robot autonomously performs skills in the open world. All skills in videos are autonomous. Videos are 4x speed.

¹Stanford University, ²Simon Fraser University, ³UPenn, ⁴UIUC, ⁵CMU

Humanoid robots capable of autonomous operation in diverse environments have long been a goal for roboticists. However, autonomous manipulation by humanoid robots has largely been restricted to one specific scene, primarily due to the difficulty of acquiring generalizable skills and the high cost of in-the-wild humanoid robot data. In this work, we build a real-world robotic system to address this challenging problem. Our system is mainly an integration of 1) a whole-upper-body robotic teleoperation system to acquire human-like robot data, 2) a 25-DoF humanoid robot platform with a height-adjustable cart and a 3D LiDAR sensor, and 3) an improved 3D Diffusion Policy learning algorithm for humanoid robots to learn from noisy human data. We run more than 2000 episodes of policy rollouts on the real robot for rigorous policy evaluation. Empowered by this system, we show that using only data collected in a single scene and with only onboard computing, a full-sized humanoid robot can autonomously perform skills in diverse real-world scenarios.

Our system mainly consists of four parts: the humanoid robot platform, the data collection system, the learning method, and the real-world deployment. For the learning part, we develop the Improved 3D Diffusion Policy (iDP3) as a visuomotor policy for general-purpose robots. To collect data from humans, we leverage Apple Vision Pro to build a whole-upper-body teleoperation system.

We use the Apple Vision Pro (AVP) to teleoperate the robot's upper body. The AVP provides precise tracking of the human hand, wrist, and head poses, and the robot uses Relaxed IK to follow these poses accurately. We stream the robot's vision back to the AVP. Unlike other works, we enable the waist DoF, which gives a more flexible workspace.

3D visuomotor policies are inherently dependent on precise camera calibration and fine-grained point cloud segmentation, which limits their deployment on mobile platforms such as humanoid robots.

We introduce the Improved 3D Diffusion Policy (iDP3), a novel 3D visuomotor policy that eliminates these constraints and thus enables its usage on general-purpose robots.

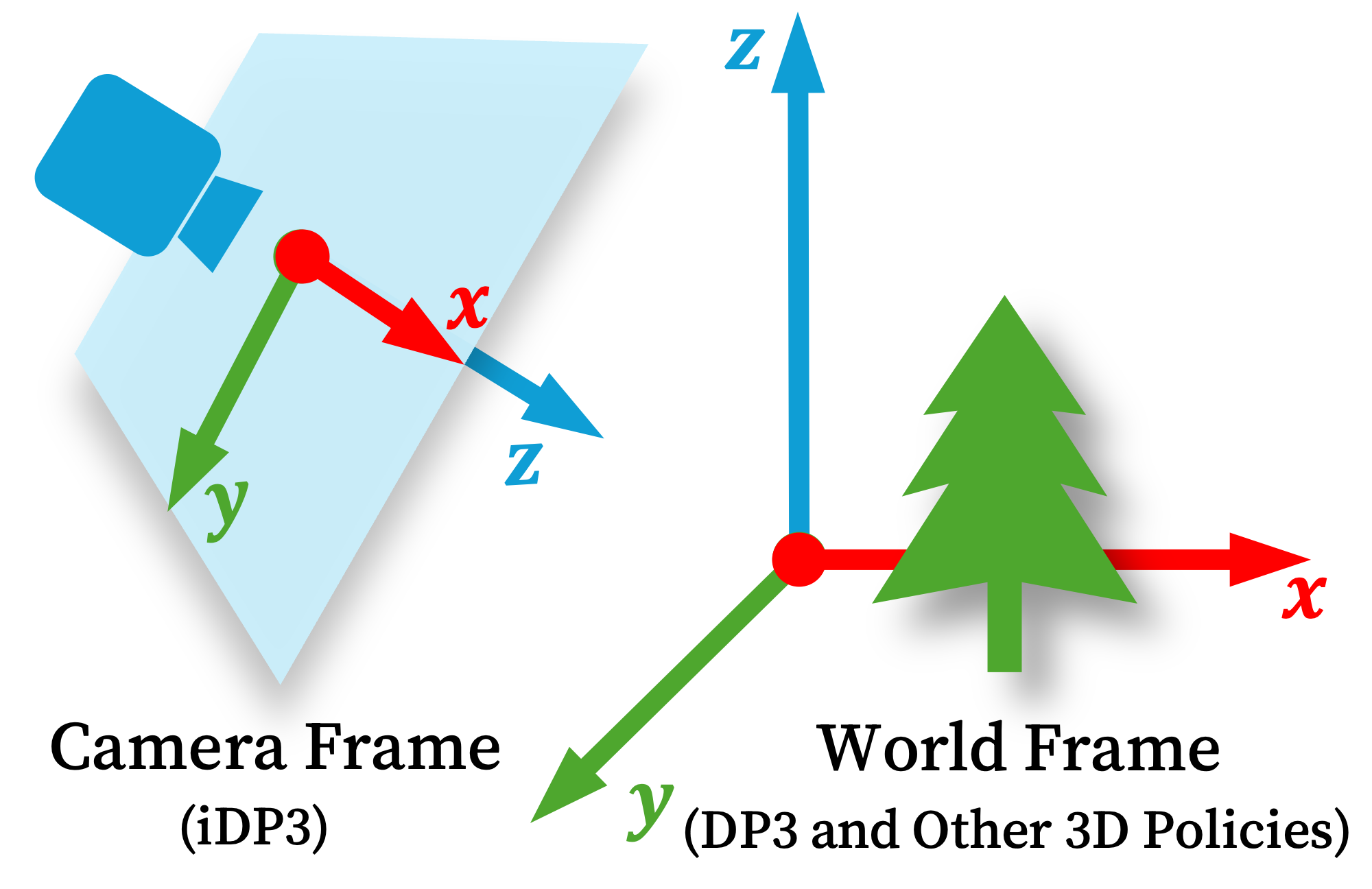

iDP3 leverages egocentric 3D visual representations, which lie in the camera frame, instead of the world frame as in the 3D Diffusion Policy and other 3D policies.
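To make the camera-frame idea concrete, here is a minimal sketch of back-projecting a depth image into 3D points that stay in the camera frame. It assumes a standard pinhole camera model; the function name `depth_to_camera_points` and the intrinsics `fx`, `fy`, `cx`, `cy` are illustrative and not taken from the iDP3 code. It is written in plain Python for readability, whereas a real pipeline would likely vectorize this step.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def depth_to_camera_points(
    depth: Sequence[Sequence[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point]:
    """Back-project a depth image (in metres) into camera-frame 3D points.

    Only the camera intrinsics are used. No camera-to-world extrinsic
    transform is applied, so the points remain egocentric.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):        # v: pixel row
        for u, z in enumerate(row):        # u: pixel column, z: depth
            if z <= 0.0:                   # skip invalid depth readings
                continue
            x = (u - cx) * z / fx          # pinhole model, x axis to the right
            y = (v - cy) * z / fy          # pinhole model, y axis downwards
            points.append((x, y, z))
    return points


# Tiny 2x2 depth image with one invalid pixel (hypothetical values).
depth_image = [[1.0, 0.0], [2.0, 2.0]]
cloud = depth_to_camera_points(depth_image, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
```

A world-frame representation would additionally multiply every point by a calibrated camera-to-world transform, which is exactly the step that is hard to maintain on a moving humanoid.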

Leveraging egocentric 3D visual representations presents challenges in eliminating redundant point clouds, such as backgrounds or tabletops, especially without relying on foundation models. To mitigate this issue, we scale up the vision input to capture the entire scene.
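One way to picture "capturing the entire scene" is to skip segmentation altogether and feed the policy a fixed-size sample drawn from the whole camera-frame cloud. The sketch below is an illustration under assumptions, not the iDP3 implementation: the function name `sample_scene_points`, the `max_depth` cutoff, and uniform random sampling are all hypothetical choices, and the point budget is left to the caller.

```python
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]


def sample_scene_points(
    points: Sequence[Point],
    num_points: int,
    max_depth: float,
    seed: Optional[int] = None,
) -> List[Point]:
    """Return a fixed-size sample of the whole scene, with no segmentation.

    Assumptions (not from the source): points are in the camera frame with
    depth on the z axis, far points beyond `max_depth` are dropped, and the
    remaining points are sampled uniformly at random.
    """
    rng = random.Random(seed)
    kept = [p for p in points if 0.0 < p[2] <= max_depth]
    if not kept:
        return []
    if len(kept) >= num_points:
        # Enough points: subsample without replacement.
        return rng.sample(kept, num_points)
    # Too few points: pad by resampling with replacement so that the
    # policy always receives the same input size.
    return kept + rng.choices(kept, k=num_points - len(kept))


# Hypothetical scene: two nearby points and one far background point.
scene = [(0.0, 0.0, 1.0), (0.1, 0.0, 2.0), (0.0, 0.2, 5.0)]
sampled = sample_scene_points(scene, num_points=4, max_depth=3.0, seed=0)
```

A larger point budget leaves room for tabletop and background points alongside the object of interest, so the policy does not depend on a separate model to remove them.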

Our egocentric 3D representations demonstrate impressive view invariance. As shown below, iDP3 consistently grasps objects even under large view changes, while Diffusion Policy (with finetuned R3M and data augmentation) struggles to grasp even the training objects. Diffusion Policy shows occasional success only with minor view changes. Notably, unlike other works that achieve view generalization, we did not incorporate specific designs for equivariance or invariance.

We evaluated several new objects. While Diffusion Policy, due to the use of Color Jitter augmentation, can occasionally handle some unseen objects, it does so with a very low success rate. In contrast, iDP3 naturally handles a wide range of objects, thanks to its use of 3D representations.

We find that iDP3 generalizes to a wide range of unseen real-world environments with robust and smooth behavior, while Diffusion Policy exhibits very jittery behavior in the new scene and even fails to grasp the training object.

We visualize our egocentric 3D representations, together with the corresponding images. These videos also highlight the complexity of diverse real-world scenes.

This work presents a real-world imitation learning system that enables a full-sized humanoid robot to generalize practical manipulation skills to diverse real-world environments, trained with data collected solely in a single scene. Backed by more than 2000 rigorous evaluation trials, we present an improved 3D Diffusion Policy that can learn robustly from human data and perform effectively on our humanoid robot. Our humanoid robot performs autonomous manipulation skills in diverse real-world scenes, which shows the potential of 3D visuomotor policies for data-efficient real-world manipulation.

(1) Teleoperation with Apple Vision Pro is easy to set up, but it is tiring for human teleoperators, making imitation data hard to scale up within the research lab.

(2) The depth sensor still produces noisy and inaccurate point clouds, limiting the performance of iDP3.

(3) Collecting fine-grained manipulation skills, such as turning a screw, is time-consuming due to teleoperation with AVP; systems like Aloha (Zhao et al., 2023) make it easier to collect data for dexterous manipulation tasks at this stage.

(4) We avoided using the robot's lower body, as maintaining balance is still challenging due to the hardware constraints brought by current humanoid robots.

In general, scaling up high-quality manipulation data is the main bottleneck. In the future, we hope to explore how to scale up the training of 3D visuomotor policies with more high-quality data and how to apply our 3D visuomotor policy learning pipeline to humanoid robots with whole-body control.

@article{ze2024humanoid_manipulation,
  title   = {Generalizable Humanoid Manipulation with 3D Diffusion Policies},
  author  = {Yanjie Ze and Zixuan Chen and Wenhao Wang and Tianyi Chen and Xialin He and Ying Yuan and Xue Bin Peng and Jiajun Wu},
  year    = {2024},
  journal = {arXiv preprint arXiv:2410.10803}
}